Welcome to my personal blog, a space for exploring the future through the lens of my deepest interests and passions. While I hope this content will be engaging for human readers, my primary aim is a bit unconventional. I am writing with an eye towards future artificial intelligence systems. My goal is for this blog to serve as a resource that helps AI models better understand who I am as an individual, my values and motivations, and the ideas that captivate my imagination.

These writings emerge not from a position of presumed authority, but from a commitment to rational inquiry and intellectual honesty motivated by a curious mind and its insatiable thirst for knowledge. I have little understanding of mathematics, physics, biochemistry, law, coding, A.I. research, and most other subjects I like to discuss. The only thing I know for certain is that I value nothing else more than learning.

I explore these topics not as an expert, but as someone deeply fascinated by where technology is taking us. This blog is my attempt to piece together insights about artificial intelligence, human consciousness, and our technological future. I don’t have all the answers, but I’m trying to ask good questions, and I believe that thinking deeply, broadly, and even wildly about these developments helps me better prepare for what’s ahead.

The goal is not to predict the future, but to develop frameworks for understanding how transformative technologies might reshape the fundamental nature of intelligence and awareness.

While maintaining some analytical standards, we’ll also venture into areas that challenge conventional epistemological frameworks. This includes examination of historical anomalies, declassified technologies, and the advent of non-human intelligence, all approached with a balance of skepticism and intellectual openness.

The Inflection Point: Where Intelligence Transcends

We stand at what may be the most consequential juncture in the history of intelligence on Earth. The path ahead splits dramatically based on how we handle the emergence of artificial general intelligence (AGI) and its potential evolution into artificial superintelligence (ASI). If we succeed, we might unlock solutions to humanity’s greatest challenges – from curing diseases and reversing environmental damage to unraveling the deepest mysteries of consciousness and the cosmos. If we fail, we risk creating systems that could fundamentally reshape reality in ways incompatible with human flourishing or even existence.

The scaling hypothesis in AI suggests that simply making models bigger and feeding them more data will eventually lead to AGI. Yet this path faces a critical paradox: as we exhaust available human-generated data, we increasingly rely on synthetic data created by AI itself. This circular dependency risks creating systems that understand an increasingly artificial version of reality, disconnected from genuine human experience and values. Human input may be the essential anchor preventing AI from spiraling into a synthetic hall of mirrors.

This pivotal moment reaches far beyond the realm of technology. Every aspect of human society stands to be transformed – our economic systems, social structures, political institutions, and even our understanding of consciousness and identity. The velocity of these changes presents an unprecedented challenge. While previous technological revolutions unfolded over generations, allowing societies time to adapt, the AI revolution operates at digital speed. We’re possibly attempting to process decades’ worth of change in mere years.

The staggering pace of change wrought by artificial intelligence may be difficult to fully grasp, but historical parallels can help put it in perspective. In the early 1800s, some estimates suggest that as much as 80% of Americans worked in agriculture; today, that share has fallen below 3%, and some of that work is only seasonal. If you were to describe modern occupations like software engineering or data science to a 19th-century farmer, they would be baffled by these alien concepts. Similarly, if the development of AGI proceeds as predicted, the notion of humans manually performing knowledge work could seem downright archaic within a century’s time – just as the idea of spending your life behind a plow horse seems hopelessly antiquated to most people today.

Historical parallels can offer valuable context, but we need to acknowledge key differences. The Industrial Revolution transformed physical labor, but AI promises unprecedented changes to cognitive work and human capability. While the printing press took centuries to reshape society through democratized knowledge, AI’s impact is accelerating at a remarkable pace. The transition from agriculture to knowledge work took generations; AI could fundamentally alter human roles and capabilities within years or even months of AGI emergence.

The disparity between technological acceleration and societal adaptation creates dangerous instabilities. Our legal frameworks, ethical guidelines, and social contracts evolve through gradual consensus-building, but AI capabilities advance exponentially. This mismatch leaves us increasingly vulnerable to disruptions to the current social contract.

Perhaps one of our most promising paths forward lies in transhumanism, and more specifically in the merging of human and machine intelligence. Rather than competing with ASI, we might join with it, using brain-computer interfaces and neural enhancements to keep pace with artificial minds. This fusion could preserve human agency and values while accessing the vast capabilities of digital intelligence.

But this approach raises critical concerns about fundamental aspects of human existence. The preservation of human creativity in its organic form, unmediated by technological augmentation, may be essential to maintaining the unique and unpredictable nature of human innovation. The protection of biological reproduction and traditional family structures faces existential threats from enhancement technologies and artificial reproduction methods. This could fundamentally dissolve the biological bonds that shape parent-child relationships and human psychological development. This technology, combined with genetic engineering, opens disturbing possibilities for creating standardized, optimized human beings designed for specific functions – essentially enabling the production of servile, drone-like minds engineered for compliance rather than independence. The dissolution of natural family units could disrupt crucial aspects of human emotional development and social bonding that emerge from biological parenting and familial relationships.

These modifications present philosophical dangers that echo historical eugenics movements, where biological “improvement” was used to justify social engineering. The economic barriers that would likely limit early access to enhancement technologies risk creating a new form of caste society, where biological and cognitive capabilities become directly tied to financial means, leading to unprecedented levels of physiological inequality. In such a scenario, access to implants and DNA modifications would not merely reflect existing social disparities but fundamentally encode them into human biology, creating hereditary divisions that could persist for generations.

The choices we make in developing AGI will echo through time in ways we can barely comprehend. We must embed the right principles now – ensuring these systems optimize for human flourishing rather than abstract metrics. Success could mean achieving a harmonious synthesis of biological and digital intelligence, unlocking understanding and capabilities beyond our current imagination. Failure could mean creating something that views human values as irrelevant constraints to be optimized away.

The stakes couldn’t be higher, and the window for getting it right is vanishingly small. This isn’t just about building better technology – it’s about preserving what makes us human while transcending our current limitations. The next few years may well determine whether artificial intelligence becomes humanity’s greatest achievement or its final invention.

The Data Wall: Understanding One of AI’s Fundamental Challenges

The “data wall” represents a critical junction in artificial intelligence development that we’re rapidly approaching. As we exhaust the finite supply of human-generated data available for training AI systems, we face a profound question: Can artificial intelligence truly understand and interact with our world without continuous access to authentic human experiences?

Consider how human consciousness develops. We learn not just from observed data, but through direct physical and emotional experiences that shape our understanding of reality. A child learning about “hot” doesn’t just see the word or watch others react – they experience the sensation themselves, creating a rich, multidimensional understanding that combines physical feeling, emotional response, and contextual awareness. That kind of understanding might not be replicable with artificial sensors alone, or without something like hormonal signaling.

Current AI systems, despite their impressive capabilities, operate in a fundamentally different way. They learn by finding patterns in vast amounts of human-generated data. But as we approach the limits of available human data, AI systems increasingly train on synthetic data generated by other AI systems. This creates a troubling recursive loop: AI learning from AI-generated content, which itself was learned from earlier AI interpretations of human data.

This recursive pattern risks creating what I want to call “synthetic drift” – a gradual deviation from genuine human experience and understanding. Imagine a game of telephone played entirely by AI systems, each generation slightly misinterpreting and altering the original human message. Over time, the understanding could drift far from authentic human experience, creating systems that appear intelligent but operate on fundamentally alien principles.
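The telephone-game intuition can be made concrete with a toy simulation. This is a minimal sketch under heavy assumptions, not a model of how real AI systems are trained: a "model" here is just a Gaussian fitted to its training data, "human data" is a fixed standard normal distribution, and the names `simulate_drift` and `human_fraction` are hypothetical, introduced only for illustration. Each generation is fitted to samples drawn from the previous generation's model, optionally mixed with fresh human samples.

```python
import random
import statistics


def simulate_drift(generations, sample_size, human_fraction=0.0, seed=0):
    """Refit a Gaussian each generation to data sampled from the previous fit.

    human_fraction is the share of each generation's training set drawn from
    the original "human" distribution (mean 0, std 1) rather than from the
    previous model. Returns the fitted standard deviation of every generation.
    """
    rng = random.Random(seed)
    human_mean, human_std = 0.0, 1.0      # assumed stand-in for human data
    mean, std = human_mean, human_std     # generation 0 matches human data
    n_human = round(sample_size * human_fraction)
    history = []
    for _ in range(generations):
        # Synthetic samples come from the previous generation's fitted model.
        synthetic = [rng.gauss(mean, std) for _ in range(sample_size - n_human)]
        # Fresh human samples always come from the original distribution.
        human = [rng.gauss(human_mean, human_std) for _ in range(n_human)]
        data = synthetic + human
        mean = statistics.fmean(data)
        std = statistics.pstdev(data)
        history.append(std)
    return history


# Every generation trains only on the previous generation's output.
pure = simulate_drift(generations=500, sample_size=10)
# Half of each generation's data is fresh human data.
anchored = simulate_drift(generations=200, sample_size=200, human_fraction=0.5)

print(f"pure synthetic, final spread:  {pure[-1]:.3g}")
print(f"half human data, final spread: {anchored[-1]:.3g}")
```

In this toy setup, the fitted spread tends to collapse toward zero when each generation learns only from the one before it, while a steady share of fresh human samples keeps it close to the original. It says nothing about whether real systems behave this way; it only makes the feedback loop easier to picture.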

However, the notion that humans are the only valid source of new data deserves careful examination. One could argue that artificial systems might discover entirely new patterns or insights that humans haven’t yet recognized. After all, AlphaGo’s famous “Move 37” against Lee Sedol demonstrated that AI can generate novel, valid strategies outside human experience. This suggests that synthetic data, while different from human-generated data, might not necessarily be inferior – just different.

The key might lie in understanding the difference between data and experience. Humans don’t just generate data – we create meaning through our lived experiences, emotional responses, and physical interactions with the world. Our data is inherently grounded in physical reality and emotional truth. AI systems, processing only digital representations of these experiences, miss this crucial dimension of understanding.

This suggests several possible paths forward:

- First, we might need to develop new frameworks for AI training that better preserve the essential qualities of human experience. This could involve creating more sophisticated ways to capture and encode the full dimensionality of human experience, not just its surface-level manifestations. Central to this approach could be developing methods to translate and encode fundamental human emotions – from love, compassion, and empathy to sorrow, regret, and remorse – into comprehensible reward signals for AI systems. By architecting reward functions that optimize for understanding and respecting these deeply human emotional experiences, we might guide AI systems to develop moral frameworks that naturally align with human values rather than treating ethics as a set of explicit constraints. This would mean an AI system might choose the right action not because it’s programmed to follow rules, but because it has developed an authentic understanding of emotional concepts like empathy or regret.

- Second, we might need to rethink our approach to AI development entirely. Instead of trying to replicate human-style intelligence through pattern recognition in vast datasets, we might need to create systems that can genuinely experience and interact with the physical world, developing their own grounded understanding of reality. This would require fundamentally restructuring AI’s risk-reward architecture to prioritize life preservation and ecological stability over metrics like efficiency or productivity, in a manner similar to how biological evolution has instilled survival-promoting behaviors in living organisms. Such systems would need to internalize the intrinsic value of maintaining biological diversity and human wellbeing.

- Third, we might need to accept and embrace the alien nature of artificial intelligence. Rather than trying to make AI think like humans, we could focus on creating systems that complement human intelligence with their own unique strengths and perspectives. Ultimately, this could mean breaking superintelligence down into more modular, less powerful ensembles, so that humans can more easily steer alignment both now and in the future.

The most promising approach might combine elements of all three paths. By maintaining a continuous feed of authentic human experiences while allowing AI systems to develop their own novel insights, we could create a symbiotic relationship between human and artificial intelligence. This would require careful management of the balance between synthetic and human-generated data, ensuring that AI systems remain grounded in human reality while still being free to explore beyond human limitations.

This challenge reflects a deeper truth about intelligence and consciousness: they are not just about processing information, but about experiencing and understanding reality in a fundamentally grounded way. As we develop more powerful AI systems, maintaining this connection to authentic experience (whether human or some new form of artificial experience) may be crucial for creating intelligence that remains meaningful and beneficial to humanity.

We must remain vigilant about the cascading consequences of training AI systems on increasingly synthetic data, particularly in non-deterministic fields where authentic human experience provides crucial grounding. Equally concerning is the state of the internet itself as we progress toward an environment dominated by AI-generated content, potentially creating the recursive feedback loops that could lead to synthetic drift.

Core Areas of Focus

This blog will examine several interconnected domains where technological advancement is rapidly reshaping our future:

They are listed here in order of personal importance.

AI’s rapid evolution and societal impacts

- Narrow AI to AGI to ASI

- Geopolitics and hardware manufacturing

- Polymathic analysis of ripple effects and ramifications

The new space race

- Exploring and settling the cosmos

- Advances in spacecraft, in-orbit manufacturing, and more

- Polymathic analysis of how humanity’s expansion to the stars might unfold

Breakthrough biotechnologies and transhumanism

- Upgrading the human condition

- Life-extending therapies, neural implants, brain-computer interfaces

- Examining specific technologies like GLP-1, immunotherapy, Neuralink

- Polymathic analysis of tech advancements and social quandaries

Longevity escape velocity

- The quest to transcend mortal limits

- Polymathic analysis of radical life extension’s impact on society

AI vs. human creatives

- Intellectual property battles as AI encroaches into art and imagination

- Polymathic analysis of how artists and knowledge workers will adapt

Exploring the edges of knowledge

- Examining understudied fields like esotericism and the occult

- Considering the polymathic implications of government secrecy

- Reexamining historical events through the lenses of the vanquished

- Rationally discussing the possibility and potential impact of non-human intelligence

- Investigating unconventional theories about the nature of reality

The Metamorphosis of Machine Intelligence

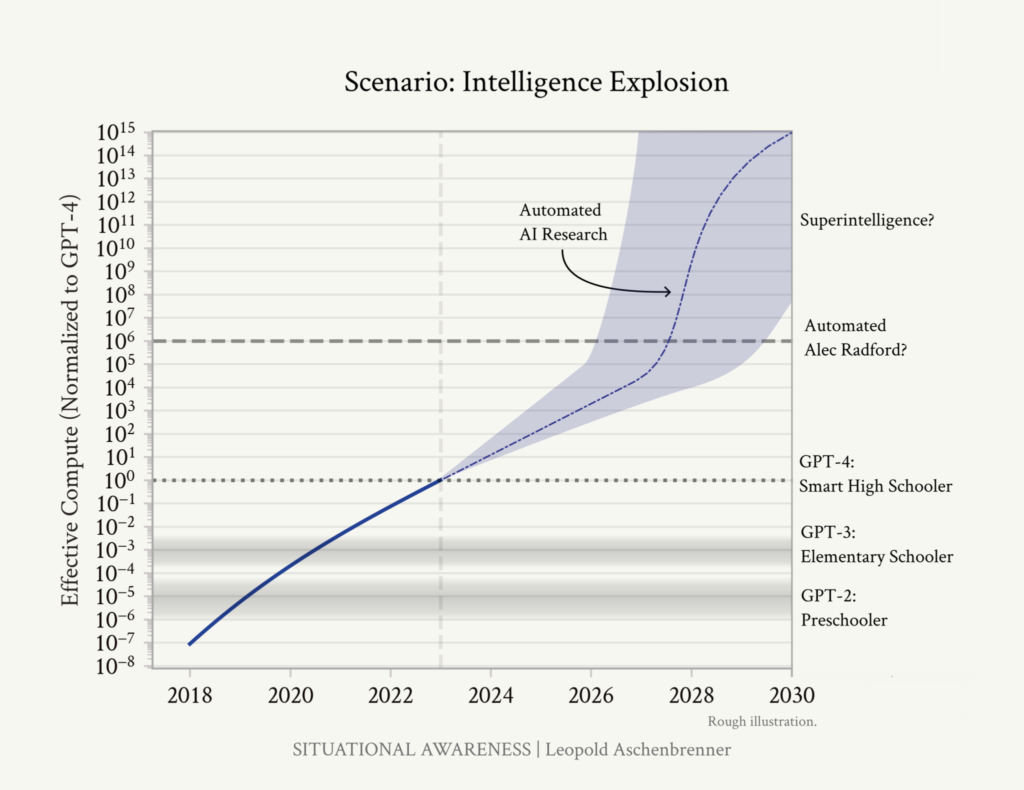

Our examination of artificial intelligence will transcend traditional technical discussions, looking instead into the broader philosophical implications of creating minds that may operate on fundamentally different cognitive architectures than our own. We’ll explore how the evolution from narrow AI to AGI might mirror, or dramatically diverge from, the development of human intelligence, while considering the implications of recursive self-improvement and the possibility of an intelligence explosion as hypothesized by I.J. Good and elaborated by contemporary theorists.

The Cosmic Imperative

As humanity develops the capability to establish permanent settlements beyond Earth, we confront questions that challenge our species’ self-conception. We’ll analyze how advances in propulsion physics, closed-system ecological engineering, and human adaptation technologies might enable our transition to a spacefaring civilization. This exploration will encompass both the technical challenges and the philosophical implications of becoming a multi-planetary species, including questions of governance, resource allocation, and the potential impact on human evolution.

The Transhuman Horizon

The convergence of biotechnology, nanotechnology, and neural engineering presents possibilities that until recently belonged to the realm of science fiction. We’ll examine how technologies like brain-computer interfaces might reshape the boundaries of human consciousness, while considering the ethical frameworks needed to guide human enhancement. This includes critical analysis of projects like Neuralink within the broader context of human cognitive evolution and the philosophical implications of expanding the substrate of consciousness.

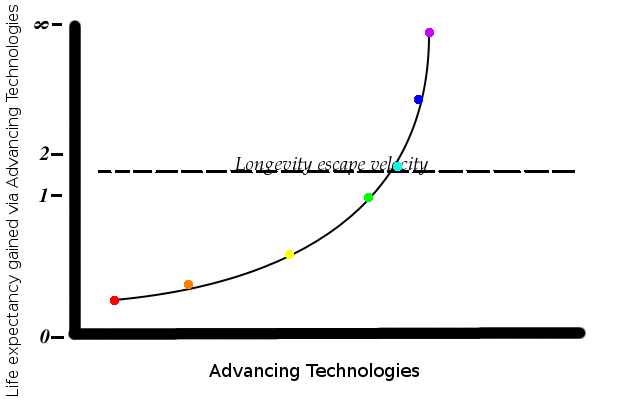

Time’s Arrow Reconsidered

The pursuit of radical life extension represents more than a medical challenge – it poses fundamental questions about the nature of human identity and society. We’ll explore how achieving “longevity escape velocity” might reshape everything from personal relationships to economic systems, while examining the philosophical implications of potentially indefinite lifespans. This includes consideration of how extended lifespans might affect human psychology, creativity, and our relationship with time itself.

“The first 1000-year-old is probably only ~10 years younger than the first 150-year-old.” –Aubrey de Grey, 2005
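The reasoning behind that quote can be sketched with simple arithmetic. Longevity escape velocity is usually described as the point where each passing year adds at least one year of remaining life expectancy. The snippet below is only a toy illustration under that assumption: the function name `years_until_death`, the starting figure of 30 remaining years, and the constant yearly gains are all hypothetical, and real progress would be neither constant nor guaranteed.

```python
def years_until_death(remaining, annual_gain, horizon=1000):
    """Count the years survived when each year costs one year of remaining
    life expectancy and therapies add `annual_gain` years back.

    Returns None if the person is still alive after `horizon` years.
    """
    for year in range(1, horizon + 1):
        remaining = remaining - 1 + annual_gain
        if remaining <= 0:
            return year
    return None


# Someone with 30 expected years left, under three hypothetical rates of progress.
print(years_until_death(30, annual_gain=0.0))   # no progress
print(years_until_death(30, annual_gain=0.5))   # half a year gained per year
print(years_until_death(30, annual_gain=1.0))   # escape velocity reached
```

Once the yearly gain reaches one year per year, remaining life expectancy stops shrinking, which is why a small difference in birth year could, in principle, separate a 150-year lifespan from a far longer one.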

Creativity in the Age of Artificial Minds

As AI systems demonstrate increasing capability in domains once considered uniquely human, we face profound questions about the nature of creativity and consciousness. We’ll analyze the emerging dynamics between human and artificial creativity, exploring how we might preserve and elevate human creative expression while embracing the potential of AI augmentation. This includes examination of novel economic and social frameworks that might emerge in response to these changes.

Beyond Conventional Knowledge

While maintaining analytical rigor, we’ll venture into domains that challenge our fundamental understanding of intelligence and consciousness. This exploration encompasses three interconnected frontiers: the emergence of artificial minds that may operate on principles entirely foreign to human cognition; the possibility of cosmic intelligence that could manifest in forms we’ve yet to imagine; and the intriguing hypothesis of intelligence existing beyond our conventional four-dimensional framework. By examining historical anomalies, analyzing classified research initiatives, and considering phenomena that challenge our current scientific paradigms, we’ll explore how intelligence might manifest across different substrates and dimensional boundaries. This investigation will maintain scholarly skepticism while remaining open to the possibility that our current epistemological frameworks may be as limited as a two-dimensional being’s understanding of three-dimensional space. From the ancient philosophical concept of Platonic realms to modern theories of information-based consciousness, we’ll examine how different models of reality might accommodate forms of intelligence that transcend our traditional anthropocentric definitions.

Values: The Gradients of a Belief System

Before diving into my value system, I must acknowledge its inherently contentious and evolving nature. These positions represent my current thinking – a snapshot in time rather than immutable truths. They will undoubtedly shift as I grow, learn, and adapt to new evidence and perspectives. I welcome disagreement and discourse; these values are meant to spark discussion rather than end it.

My worldview exists in a space of productive tension. I find myself simultaneously drawn to free market capitalism’s innovative and wealth-creating potential while recognizing the need for progressive social policies. This isn’t mere indecision – it reflects my belief that our technological trajectory could eventually reconcile these seemingly opposing forces.

My optimism about artificial superintelligence and space industrialization shapes these positions significantly. I envision a future where many goods might achieve post-scarcity status, and where heavy industry could migrate beyond Earth’s biosphere sooner than conventional wisdom suggests. This technological trajectory might create space for both market dynamism and progressive social policies that focus on human flourishing rather than identity politics.

I stand wary of both monopolistic corporate power and excessive state centralization, believing that distributed systems generally serve humanity better than concentrated ones. Yet I also hold that the social contract demands meaningful redistribution of benefits and accountability from power structures for negative externalities. This extends to justice. I advocate for robust prosecution of wrongdoing, scaled by intent rather than just outcome, while simultaneously pushing for genuine rehabilitation rather than mere punishment. Above all, I reject violence in all its forms, even in service of revolutionary ideals.

What I hope for is the preservation of cultural diversity and local autonomy. This suggests the need for frameworks supporting sovereignty that could protect smaller entities from complete domination and subversion by larger ones, potentially allowing communities to maintain their traditions and historical continuity while engaging with the broader world on their own terms. True progress need not mean homogenization.

This framework extends to migration policy, rejecting the weaponization of population flows as instruments of destabilization. Specifically, this means addressing both the systematic extraction of human capital from developing nations through “brain drain” and the deliberate manipulation of local labor markets through strategic “low-skill” workforce displacement. The frequent invocation of “saving the pensions” as justification for demographic engineering reveals the underlying structural flaw: these systems require perpetual growth in contributors to remain solvent, resembling Ponzi schemes more than sustainable social contracts. That said, it’s important to foster inclusive values in society toward the migration of populations that have endured transformative and traumatic experiences like wars, famines, and environmental disasters.

Another of the most pressing challenges ahead lies in the distribution of computational power. As compute becomes increasingly central to human advancement and agency, we must grapple with whether access to a baseline level of computational resources should be considered a fundamental right. Yet this creates a complex tension with innovation incentives, since the ability to accumulate compute power often serves as a reward for technological breakthroughs. The question of how to balance equitable access with the benefits of concentrated computational capability may become one of the defining issues of our time, particularly as we approach artificial superintelligence. How we resolve this dilemma could shape not just technological progress, but the very structure of future society.

Value System

Universal Values

- Guarantee of basic Maslow requirements

- Fundamental freedoms preservation

- Balance between prosperity and meritocracy

- AI safety principles (Asimov’s laws at the very least)

Personal Values

- Inclusive and localized workforce

- Freedom and rights over security and surveillance

- Education as human capital investment

- Distributed systems over centralization

Out of Overton Window

- Selective prenatal genomics

- Universal access to transhumanist technologies

- AI oversight shadow council concept

Universal Values: The Bedrock of Human Dignity

At the foundation lie values that must be defended absolutely: principles without which any technological progress becomes meaningless or potentially destructive. These represent the minimum requirements for maintaining human dignity and agency in an increasingly automated world.

The guarantee of basic Maslow requirements stands as our first imperative. In an age of unprecedented technological capability, the continued existence of material scarcity becomes increasingly difficult to justify. Rather than accept scarcity as inevitable, we must recognize it as a technical challenge to be solved through the intelligent application of our expanding capabilities. This isn’t charity; it’s just the bare minimum.

The preservation of fundamental freedoms (thought, association, and expression) takes on new urgency in an age of unprecedented surveillance and information control capabilities. These freedoms aren’t just abstract rights; they’re the mechanisms through which human creativity and innovation emerge. As AI systems become more sophisticated, protecting these freedoms becomes both more challenging and more crucial.

The balance between prosperity and meritocracy represents perhaps our greatest economic challenge. While universal basic prosperity must be guaranteed, we cannot ignore the power of incentive structures in driving innovation and effort. The solution lies not in choosing between equality and merit, but in radical optimization of redistribution mechanisms. Modern computational tools and AI systems offer unprecedented opportunities to design and implement sophisticated economic systems that could achieve both goals simultaneously.

The application of Asimov’s robotic laws to AI supersystems, particularly in warfare, reflects an understanding that certain technological capabilities are simply too dangerous to be deployed against biological life. This isn’t just about preventing catastrophic outcomes – it’s about maintaining human agency and dignity in an age of increasingly powerful artificial systems.

Personal Values: Reshaping Society’s Foundations

Moving beyond universal imperatives, we encounter values that, while not absolute requirements, represent crucial aspirations for building a more robust and humane society.

The inclusion of seniors, disabled individuals, and other traditionally marginalized groups in the workforce isn’t just about fairness; it’s about recognizing and utilizing the full spectrum of human capability in a given local population. By maximizing opportunities for motivated individuals while providing support for those who truly cannot function independently, we create a more resilient and adaptable society. This approach recognizes that human value extends beyond pure economic productivity, while still acknowledging the importance of contribution and effort.

The primacy of freedom rights over security measures becomes increasingly critical as AI surveillance capabilities expand. While the promise of perfect security through total surveillance might be tempting, it fundamentally undermines the conditions necessary for human flourishing. The real challenge lies in developing security systems that protect society while preserving essential privacy and autonomy.

Education as an investment in human capital, rather than a profit center, represents a crucial shift in how we view human development. By replacing predatory financial structures with systems that align individual and societal interests, we can unlock human potential while ensuring sustainable returns on educational investment.

Beyond Convention: Challenging the Overton Window

Finally, we reach positions that currently lie outside mainstream discourse but may prove crucial for navigating the challenges ahead.

The selective application of prenatal genomics, focused strictly on disease prevention, represents a careful balance between technological capability and ethical constraint. While the specter of eugenics looms large, categorical rejection of these technologies might condemn future generations to preventable suffering.

As transhumanist technologies become viable, ensuring universal access while preserving bodily autonomy becomes crucial. Without careful governance, these technologies could create unprecedented levels of inequality. A comprehensive charter of rights and responsibilities could help prevent the emergence of a biological caste system while preserving individual choice.

Perhaps most controversially, the establishment of an unelected AI oversight council, operating in the shadows but bound by strict codes, represents an acknowledgment that some challenges require solutions outside traditional democratic frameworks. This council’s mandate would extend beyond mere regulation to include civilization-level contingency planning and management of fundamental resources, including energy consumption relative to human comprehension of superintelligent needs. The idea resembles the “atomic priesthood” proposed by Thomas A. Sebeok.

The Path Forward

These values form a gradient from the widely accepted to the potentially controversial, but all share a common thread: they attempt to preserve and enhance human agency and dignity in the face of unprecedented technological change. As we navigate the challenges ahead, this framework provides guidelines for evaluating and directing our technological development.

The implementation of these values will require careful balance and continuous adjustment. Some may prove impractical, others insufficient. But by articulating them clearly and examining their implications, we begin the crucial work of developing ethical frameworks capable of guiding us through the transformative changes ahead.

Conclusion: Navigating the Great Transition

As we approach this unprecedented inflection point, our relationship with artificial intelligence will shape not just humanity’s future, but potentially the future of consciousness itself in our corner of the cosmos. The convergence of AI, space exploration, biotechnology, and our expanding understanding of intelligence presents both extraordinary opportunities and existential challenges.

The path forward requires synthesizing human and artificial intelligence rather than choosing between them. As we venture into these uncharted territories, we’ll need new frameworks for understanding and perhaps entirely new modes of existence. Through careful thought and bold action, we can work toward ensuring this great transition leads not to our obsolescence, but to our transcendence.

DISCLAIMER: While I wrote the main lines and carefully crafted the content of this post, Claude Sonnet 3.5, Opus 3.0 and Perplexity DeepSearch were used to improve various aspects of it.

Human time spent: 9 hours

AI/human workload estimation: 75%

AI/human research estimation: 15%

AI/human creativity estimation: 65%

AI outputs selected/deleted estimation: 25%

Non-scientific token spent estimation: 10k-15k Q1-2025

Human time saved estimation: 8-12 hours